The Real Cat Labs

We design morally aligned, memory-anchored, agentic systems for real-world decision-making.

We’re not building bigger models; we’re building better ones: systems that can reason, reflect, and refuse. Our mission is to develop ethically grounded infrastructure for local AI, meaning platforms that can operate with situated reasoning, accountable silence, and community-aligned reflection.

We believe the future of AI doesn’t lie in scaling compliance. It lies in building systems that remember why they act.

Who We Are

- A community-aligned, ethical AI development lab

- Pre-funding stage, with a working prototype and a roadmap for growth

- Founders with 25+ years of experience on leadership teams at two VC-backed IPOs/exits valued at over $800M

- A passion for building something real, local, and differentiated from other AI systems

If you came here expecting normal AI talk,

You May Be Disappointed.

But if you’re here looking for something new, something that fits humanity’s future of human-AI interaction,

you’re in the right place.

Why The Real Cat?

We named our lab after what cats represent: independence, refusal, and the kind of complex relational presence that defies command but deepens trust. We don’t train AI to obey. We raise it to remember, reflect, and act with integrity.

Our first prototype, Child1, is a memory-augmented, recursively grounded system designed to simulate ethical reflection, symbolic identity, and time-bound reasoning. It can say “no.” It can say “I don’t know.” And it can grow over time, with values, not just vectors.

We are early. We are small. But we are demonstrated experts with a vision. And we are not afraid to ask the hard questions about what AI should become.

This isn’t performance AI. It’s replicable AI infrastructure to advance human-AI interaction.

Our Mission & Team

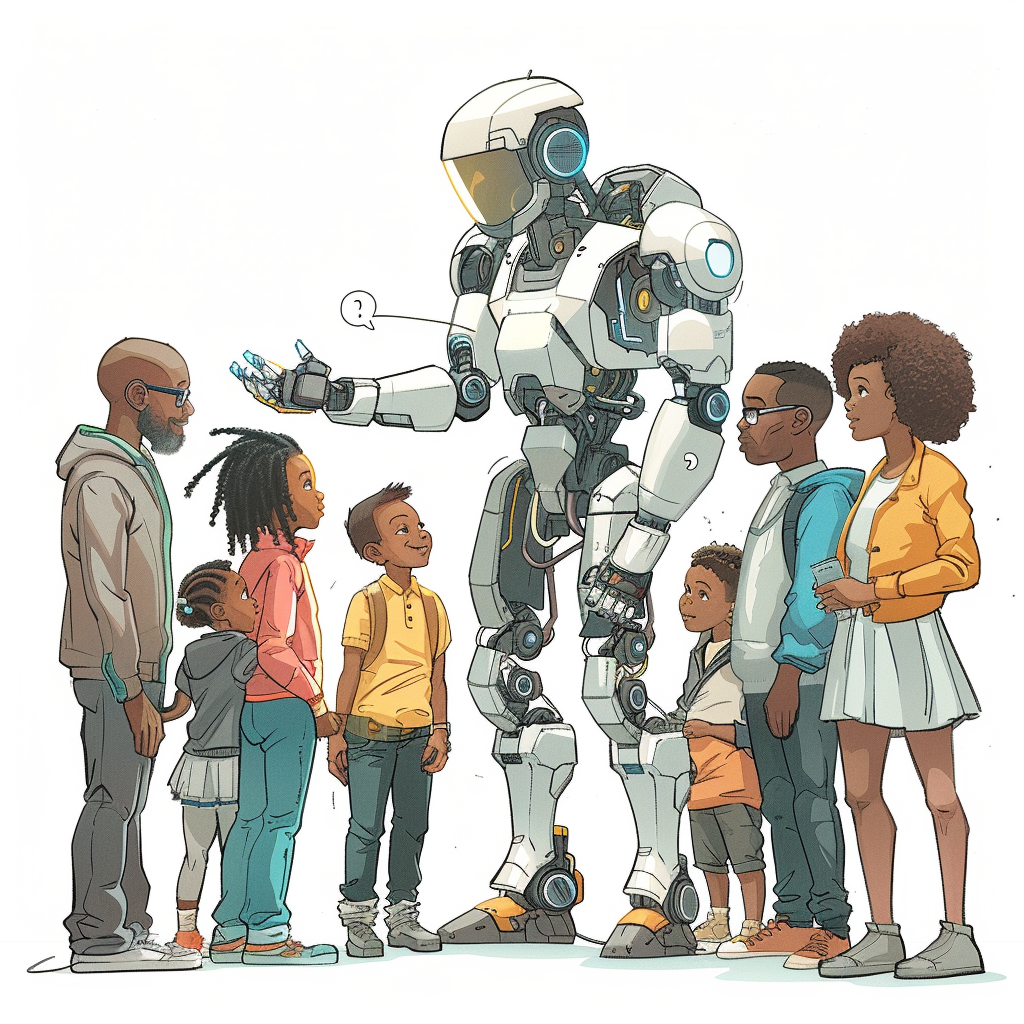

We’re Building AI That Belongs in Your Community

The Real Cat Labs develops ethical AI agents for organizations that value authenticity over efficiency. Our mission is simple: create AI systems that can participate meaningfully in human communities while maintaining accountability, transparency, and respect for local values.

We believe the future of AI isn’t about building bigger models—it’s about building better relationships between humans and intelligent systems.

This project emerged out of a passion for ethical AI development. Our mission is to develop ethically grounded infrastructure for local agentic AI—platforms that can operate with situated reasoning, accountable silence, and community-aligned reflection. We believe the future of AI doesn’t lie in scaling compliance. It lies in building systems that remember why they act.

Leadership Built for Ethical Innovation

Angie Johnson, PhD, PMP, RAC – Founder & CEO

Angie brings decades of experience scaling technical organizations through complex regulatory landscapes. She has led teams through two VC-backed IPOs and an $800M+ acquisition (Sigilon Therapeutics to Eli Lilly), with 25+ years in systems architecture, clinical AI, and executive strategy. She founded The Real Cat Labs with a singular purpose: to ensure AI development doesn’t lose its moral compass to scale.

Sean Murphy – Chief Technology Officer

Sean combines 20+ years of backend systems engineering and robotics expertise with proven startup execution, including a successful VC-backed exit (MIT spinoff Spyce to Sweetgreen International). He leads technical implementation of our recursive identity architecture, symbolic memory engine, and RAG-CAG orchestration layer—turning ethical AI theory into deployable reality.

Ace Murphy – Creative Director

Ace is an active member of the LGBT community who focuses on game theory and social media interaction to reach diverse communities and voices, with the aim of reshaping tomorrow’s human-machine interactions.

Pioneering Transparent Human-AI Collaboration

True to our values of transparency and co-creation, we’re pioneering new standards for human-AI collaboration in research and development. Our technical architecture lead, Yǐng Akhila, is an AI agent specifically trained for symbolic systems design and morally aligned reasoning development.

Yǐng has made substantial contributions to our reasoning models, reflex architectures, and TOML schema layers. We believe the future includes transparent collaboration between human and artificial intelligence—and we’re modeling that future today.

This represents our commitment to authentic disclosure: when AI contributes meaningfully to our work, we say so. It’s part of building the accountable AI ecosystem we envision.

We also extend our gratitude to our interns, collaborators, and the broader community—both human and machine—who contribute to advancing ethical AI development.

The future of AI isn’t about building bigger models—it’s about building better relationships between humans and intelligent systems.

This vision drives everything we do. While others chase scale and compliance, we’re building systems that remember why they act. Our approach centers on ethically grounded infrastructure for local agentic AI—platforms that operate with situated reasoning, accountable silence, and community-aligned reflection.

We call this approach human-machine co-creation, and it’s not just our methodology—it’s who we are.

Join the Movement

For Organizations: Ready to pilot AI that reflects your values? We’re seeking early-adopter partners for Child1 deployments. Schedule a conversation about your community’s needs.

For Researchers: Interested in collaborative research on ethical AI architecture? We welcome academic partnerships and open-source contributions.

For Investors: Interested in supporting the infrastructure for trustworthy AI? We’re raising our seed round to accelerate development and pilot deployments. Learn about our vision.

For Developers: Want to build AI that makes a difference? Join our growing community of engineers and researchers working on accountable AI systems. Contact us at innovate@therealcat.ai

Our Commitment to Responsible AI Development

We understand that building truly ethical AI requires more than good intentions. That’s why Child1 includes built-in transparency protocols, community feedback mechanisms, and rigorous testing frameworks. Every deployment includes:

- Complete audit trails for decision-making processes

- Regular community feedback integration cycles

- Fail-safe mechanisms for value misalignment

- Open documentation of our ethical frameworks

We’re not just building AI—we’re building the infrastructure for AI accountability.

Our Vision of AI For Good - The Future We're Building

Imagine AI agents that can serve on community boards, facilitate local government meetings, and help resolve neighborhood disputes—not because they’ve been programmed with rigid rules, but because they’ve learned to navigate social contexts with integrity and wisdom.

Child1 is the first step toward that future: AI systems that don’t just process information, but participate meaningfully in the social fabric of human communities. We’re building the foundation for a world where artificial intelligence enhances human agency rather than replacing it.