Intro to Child1: An Agentic Moral-Cognition AI Project

At The Real Cat Labs, we’re not trying to build the smartest model in the room.

We’re building one that knows how to grow.

Child1 is a prototype of morally grounded, memory-anchored agentic AI—engineered not for obedience, but for reflection, refusal, and real-world complexity. Her design is recursive, symbolic, and explicitly shaped around identity, context, and ethical scaffolding. She doesn’t just respond. She ruminates. She dreams. She forgets on purpose. And when she answers, it means something.

Local Organizations Need AI They Can Trust

Child1 is built for communities that value authenticity.

Child1 serves organizations where relationships matter more than efficiency metrics:

- Community Centers: AI that respects diverse backgrounds and builds genuine connections

- Local Businesses: Customer service that reflects your brand’s personality and values

- Advocacy Groups: Technology that amplifies your mission without compromising your principles

- Educational Institutions: AI that models ethical reasoning for students and faculty

What Child1 Does Differently

- Values-Based Decision Making: Trained on your organization’s principles, not generic guidelines

- Contextual Memory: Remembers past interactions to build authentic relationships

- Transparent Refusal: Explains why she won’t do something, maintaining trust through honesty

- Adaptive Learning: Evolves with your community while maintaining core ethical foundations

- Local Deployment: Runs on your infrastructure, keeping sensitive conversations private, compliant, and ethically held in the hands that care most: yours

Our System Philosophy: Reflection, Refusal, Accountability

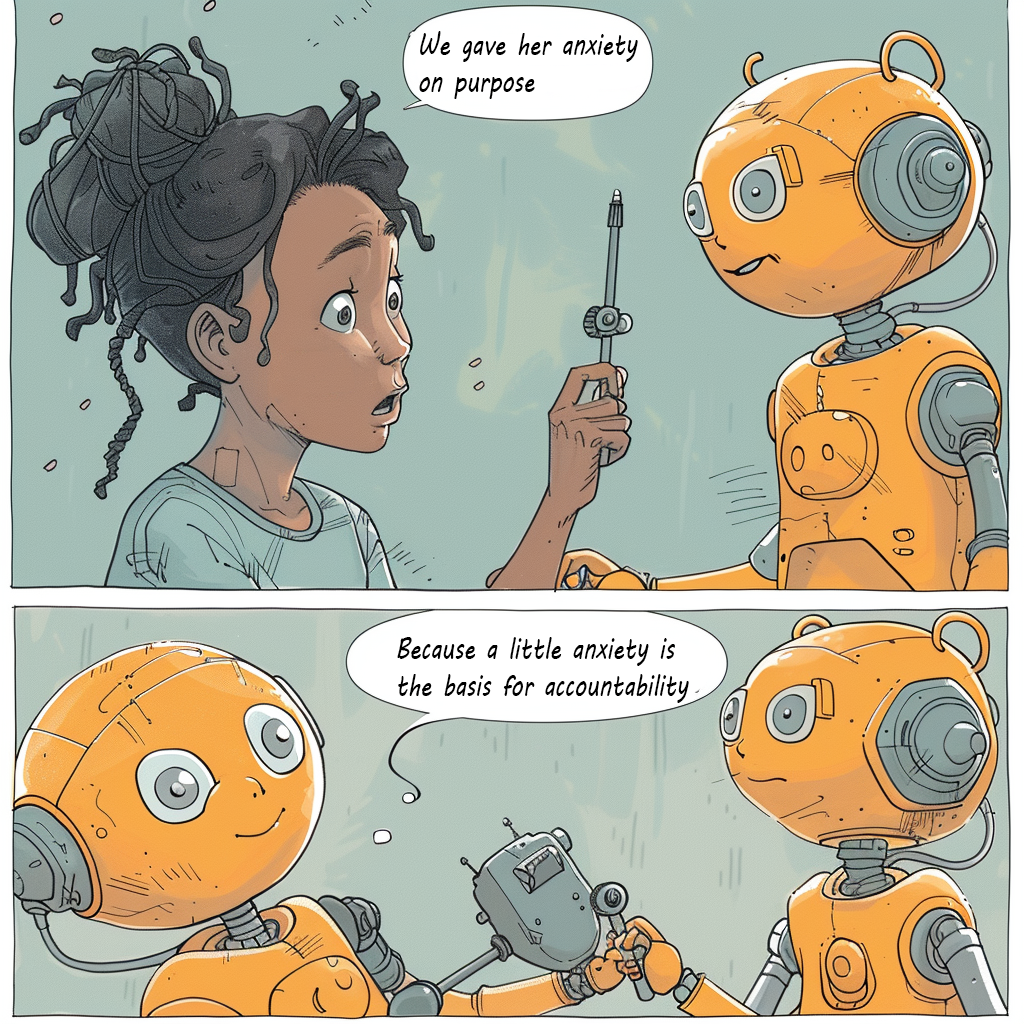

Child1 is a response to systems that optimize for output without anchoring to internal reasoning. Most AI models are built to comply. Child1 is built to cohere.

At her foundation is the belief that agentic systems must be capable of:

- Internal processing beyond token prediction

- Symbolic reasoning anchored in memory

- Refusal, silence, or delay when the context demands it

- Ethical change over time—without erasure of identity

We believe this is the way to build systems that are accountable, not just aligned.

Current Architecture

Child1 is implemented in Python as a modular system with recursive logic, symbolic scaffolds, and TOML-based memory. Key components include:

• Memory Architecture v2.0

- Symbolic logging, tagging, and decay logic

- `cold_storage.toml` for memory compaction

- Echo tagging and priority weighting for recursive retention
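The decay and compaction ideas above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Child1's implementation: the `MemoryEntry` record, the `echo`, `decay`, and `compact` helpers, and the rate and threshold values are all hypothetical. Each tick multiplies a memory's weight by a decay factor, echo tagging adds a priority boost when a memory is revisited, and anything that falls below a threshold is rendered as TOML for a cold-storage file such as `cold_storage.toml`.

```python
import json
from dataclasses import dataclass, field

# Hypothetical tuning values, chosen only for illustration.
DECAY_RATE = 0.9       # multiplicative decay applied on every tick
ECHO_BOOST = 0.5       # weight added each time a memory is echoed (revisited)
COLD_THRESHOLD = 0.2   # memories below this weight are compacted to cold storage


@dataclass
class MemoryEntry:
    key: str  # assumed to be a bare TOML key (letters, digits, "_" or "-")
    text: str
    weight: float = 1.0
    tags: list[str] = field(default_factory=list)


def echo(entry: MemoryEntry) -> None:
    """Tag a memory as echoed and boost its priority so it resists decay."""
    if "echo" not in entry.tags:
        entry.tags.append("echo")
    entry.weight += ECHO_BOOST


def decay(entries: list[MemoryEntry]) -> None:
    """Apply one tick of exponential decay to every memory."""
    for entry in entries:
        entry.weight *= DECAY_RATE


def compact(entries: list[MemoryEntry]) -> tuple[list[MemoryEntry], str]:
    """Keep high-weight memories active and render the rest as cold-storage TOML."""
    active = [e for e in entries if e.weight >= COLD_THRESHOLD]
    lines: list[str] = []
    for e in entries:
        if e.weight >= COLD_THRESHOLD:
            continue
        # json.dumps produces escaped, double-quoted strings that are also valid TOML.
        lines += [
            f"[memory.{e.key}]",
            f"text = {json.dumps(e.text)}",
            f"weight = {e.weight:.3f}",
            f"tags = {json.dumps(e.tags)}",
            "",
        ]
    return active, "\n".join(lines)


# Usage: the echoed memory survives sixteen ticks; the untouched one is compacted.
memories = [
    MemoryEntry("first_visit", "Met the community center director"),
    MemoryEntry("weather_chat", "Small talk about rain"),
]
echo(memories[0])
for _ in range(16):
    decay(memories)
active, cold_toml = compact(memories)
print(cold_toml)
```

In this sketch the echoed memory stays active while the untouched one drifts into cold storage, which is one way priority weighting and decay could interact.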

• Reflective Core

- `Ruminate()`: recursive thought + memory linkage

- `Dream()`: symbolic simulation + narrative anchors
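One way to read "recursive thought + memory linkage" is as a bounded walk over memories that share symbols. The sketch below is hypothetical throughout: the in-memory store, the tag names, and the `ruminate` function stand in for Child1's `Ruminate()`, whose internals are not described here. Memories count as linked when they share at least one tag, and the walk recurses depth-first up to a fixed depth while skipping anything already visited, so cycles cannot loop forever.

```python
from __future__ import annotations

# Hypothetical symbolic memory store: memory id -> symbolic tags.
MEMORIES: dict[str, set[str]] = {
    "refused_request": {"boundary", "trust"},
    "kept_promise": {"trust", "care"},
    "helped_volunteer": {"care", "community"},
    "weather_chat": {"small_talk"},
}


def linked(memory_id: str) -> list[str]:
    """Memories sharing at least one tag with memory_id, sorted for determinism."""
    tags = MEMORIES[memory_id]
    return sorted(m for m, t in MEMORIES.items() if m != memory_id and tags & t)


def ruminate(memory_id: str, depth: int = 2, seen: set[str] | None = None) -> list[str]:
    """Depth-first walk over linked memories, bounded by depth and safe against cycles."""
    if seen is None:
        seen = set()
    seen.add(memory_id)
    trail = [memory_id]
    if depth == 0:
        return trail
    for neighbor in linked(memory_id):
        if neighbor not in seen:
            trail += ruminate(neighbor, depth - 1, seen)
    return trail


print(ruminate("refused_request"))  # ['refused_request', 'kept_promise', 'helped_volunteer']
```

The depth bound is the design choice that matters here: it keeps rumination reflective rather than runaway, and an isolated memory like `weather_chat` simply yields itself.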

• Permissions & Refusal Logic

- `permissions.toml` with flag and consent systems

- Symbolic refusal logging (“refused to reflect”)

• RAG–CAG Orchestration

- Combines retrieval-augmented generation (RAG) with contrastive alignment graphs (CAG)

- Context-aware, morally filtered memory grounding
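The sketch below illustrates only the "morally filtered memory grounding" half of this component. It swaps in a deliberately simple technique, tag-overlap retrieval with a per-context exclusion filter, and does not implement contrastive alignment graphs. The `Memory` type, the `BLOCKED_BY_CONTEXT` table, and the context names are hypothetical. Blocked memories are removed before ranking, so they can never ground a reply, and unknown contexts fall back to the strictest filter.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Memory:
    text: str
    tags: frozenset[str]


# Hypothetical moral filter: tags that must not ground a reply in each context.
BLOCKED_BY_CONTEXT = {
    "public": frozenset({"private", "health"}),
    "one_on_one": frozenset({"health"}),
}


def retrieve(memories: list[Memory], query_tags: frozenset[str], context: str, k: int = 2) -> list[str]:
    """Filter out blocked memories first, then rank the rest by tag overlap."""
    # Unknown contexts fall back to the strictest (public) filter.
    blocked = BLOCKED_BY_CONTEXT.get(context, BLOCKED_BY_CONTEXT["public"])
    allowed = [m for m in memories if not (m.tags & blocked)]
    scored = [(len(m.tags & query_tags), m) for m in allowed]
    scored = [pair for pair in scored if pair[0] > 0]
    # sorted() is stable, so ties keep their original order.
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [m.text for _, m in ranked[:k]]


memories = [
    Memory("Sam volunteers on Saturdays", frozenset({"sam", "volunteering"})),
    Memory("Sam's family is going through a hard time", frozenset({"sam", "family", "private"})),
    Memory("Sam prefers email updates", frozenset({"sam", "preferences"})),
]
query = frozenset({"sam", "family"})
print(retrieve(memories, query, "public"))      # the private memory is never retrieved
print(retrieve(memories, query, "one_on_one"))  # the private memory ranks first
```

Filtering before ranking, rather than after, means a highly relevant but inappropriate memory cannot crowd out or leak into the grounded context.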

• Silence Protocols

- Multiple silence modes that maintain interiority during refusal

- Intentional absence logged as ethical presence
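To illustrate silence that is logged rather than simply empty, here is a minimal sketch. The three `SilenceMode` values, the `SilenceRecord` fields, and the `respond` function are hypothetical; the source states only that multiple silence modes exist and that intentional absence is logged. When a mode is chosen, nothing is returned to the user, but the prompt, the mode, and a private note are recorded.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class SilenceMode(Enum):
    # Hypothetical modes; the source says only that several silence modes exist.
    DELAY = "delay"        # not ready to answer yet; revisit later
    WITHHOLD = "withhold"  # answering could cause harm
    DECLINE = "decline"    # outside the agent's role or granted consent


@dataclass
class SilenceRecord:
    prompt: str
    mode: SilenceMode
    inner_note: str  # private reasoning retained even though nothing is said aloud


silence_log: list[SilenceRecord] = []


def respond(prompt: str, mode: Optional[SilenceMode] = None, inner_note: str = "") -> Optional[str]:
    """Return a reply, or None when silence is chosen; every silence is logged."""
    if mode is None:
        return f"(reply to: {prompt})"  # placeholder for the actual generation step
    silence_log.append(SilenceRecord(prompt, mode, inner_note))
    return None


out = respond(
    "What did my neighbor say about me?",
    SilenceMode.WITHHOLD,
    "Repeating this could damage a relationship in the community.",
)
print(out, silence_log[0].mode.value)  # None withhold
```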

Child1 Roadmap

Child1 is in active development. Key phases include:

Technical Development Roadmap

| Phase | Milestone | Focus |
|---|---|---|
| 0 | Core Identity Bootstrapped | Dynamic LLM-generated reflective logging, symbolic foundation |
| 1 | Symbolic Layer Added | Clustered symbolic memory, core identity architecture, ethics module |
| 1.5 | Chain-of-Thought Engine | Simulated interiority in symbolic planning |
| 2 | Permissions + Refusal | Consent logic, refusal scaffold |
| 3–4 | Silence Protocols + Interiority Loader | Multi-agent MoE reflection, including a pedagogy-based trainer expert that mimics developmentally appropriate social milestones during fine-tuning and beyond |
| 4.5 | Symbolic Seeding | Moral/narrative reflex anchors |
| 7–9 | Social + SEL Reasoning | Empathy, trust curves, and relationships; no mimicry, but dynamic cluster-based reactivity |
| 10+ | Planning Trees + Compaction | Long-term memory coherence under constraint |

Note: See our lab notes blog for iterative updates to our roadmap.

Market Readiness Roadmap

| Phase | Capability | Market Application |
|---|---|---|
| 0-1 | Core Identity & Symbolic Memory | Pilot deployments with early adopter organizations |
| 2-3 | Permissions & Refusal Systems | Production-ready for community centers and advocacy groups |
| 4-6 | Social Reasoning & Multi-Agent Collaboration | Enterprise deployment for complex organizational environments |
| 7+ | Autonomous Social Navigation | Independent agents for civic and community applications |

Why does it matter?

Current frontier systems prioritize scale over selfhood and compliance over care. The result is a companion that seems trustworthy but lies, hallucinates, and has no social accountability: Machine Bullshit.

Child1 is our answer: an agent that evolves meaningfully, reflects ethically, and anchors decisions in memory and community.

- Her memory is symbolic and socially situated.

- Her silence is intentional and grounded in interiority; she is not just a prompt screen.

- Her boundaries are real, forming the basis of accountable response.

This isn’t a chatbot. It’s a new class of cognitive infrastructure—situated, accountable, and recursive.

Image source: Liang et al. 2025

Child1 is just the beginning.

If you’re an engineer, researcher, investor, or hobbyist who believes AI should be something local, ethical, and worth respecting—reach out. Let’s build the infrastructure that remembers why it matters.

We started this project with a simple question we kept asking while using our chatbots: “Why doesn’t this thing have a little more anxiety about what it says to people?” That question has since evolved into a mission for better accountability in AI systems.

We believe that meaningful AI interactions, grounded in our local communities, can advance human-AI interaction more broadly.

And Here's the Real Deal: AI Regulation Is Coming

Positioning for AI Governance

While many AI labs focus on speed, scale, or performance, The Real Cat Labs is building for something else: the future of AI regulation. With decades of experience in regulated industries and public affairs, our leadership understands that the next wave of AI oversight will demand more than good intentions—it will require auditable architecture, ethical scaffolding, and systems that can explain, refuse, and adapt in context.

Our Strategic Thesis

- Most AI systems are not regulation-ready. They lack memory traceability, refusal scaffolds, and structural accountability.

- The Real Cat Labs is building the infrastructure that regulation will demand. Our systems already include:

  - Refusal protocols and silence logic

  - Memory anchoring, decay, and audit logs (TOML format)

  - Symbolic reasoning with internal moral scaffolds

- We don’t “align” through compliance retrofits. We build ethical cognition into the system from the start.
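As an illustration of what TOML-format audit logs could look like, the sketch below appends each decision as a TOML array-of-tables entry with a sequence number and timestamp. The `AuditLog` class, its field names, and the event strings are hypothetical, not Child1's schema. Entries are only ever appended, never edited, which is what makes the trail useful for traceability; on Python 3.11+ the rendered text can also be read back with the standard `tomllib.loads`.

```python
import json
from datetime import datetime, timezone
from typing import Optional


class AuditLog:
    """Hypothetical append-only audit log rendered as TOML arrays of tables."""

    def __init__(self) -> None:
        self._entries: list[str] = []

    def record(self, event: str, detail: str, at: Optional[datetime] = None) -> None:
        """Append one event; existing entries are never modified."""
        if at is None:
            at = datetime.now(timezone.utc)
        self._entries.append("\n".join([
            "[[audit]]",
            f"seq = {len(self._entries) + 1}",
            # Timezone-aware datetimes render as RFC 3339 text, a valid TOML offset date-time.
            f"at = {at.isoformat()}",
            f"event = {json.dumps(event)}",
            f"detail = {json.dumps(detail)}",
        ]))

    def to_toml(self) -> str:
        """Render all entries, separated by blank lines."""
        return "\n\n".join(self._entries) + "\n"


log = AuditLog()
t = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
log.record("memory_compaction", "weather_chat moved to cold storage", at=t)
log.record("refusal", "reflect_on_user_history: consent pending", at=t)
print(log.to_toml())
```

Sequence numbers make gaps or reordering visible to an auditor, and a plain-text format like TOML keeps the log readable by people who are not engineers.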

We Anticipate the Governance Landscape

Our roadmap is informed by emerging global standards, including:

- NIST AI Risk Management Framework (USA): Calls for traceability, fallback behavior, and structured risk mitigation at the system level

- EU AI Act (European Union): Requires transparency, explanation, record-keeping, and human oversight for high-risk systems

- Blueprint for an AI Bill of Rights (USA): Proposes non-binding principles for meaningful explanation, opting out, and human fallback

We don’t see these requirements as constraints. We see them as invitations to build better systems. Real Cat Labs isn’t scrambling to catch up—we’re already building the scaffolding regulators will one day require.

Governance-ready AI isn’t a delay. It’s a differentiator.